Shortly before I checked out for the holidays, I had the pleasure of giving a keynote at SDI Summit in Santa Clara, CA. The name might suggest an event all about software (SDI = software-defined infrastructure) but, in fact, the event had a pretty strong hardware flavor. The organizers, Conference Concepts, put on events like the Flash Memory Summit.

As a result, I ended up having a lot more hardware-related discussions than I usually do at the events I attend. This included catching up with various industry analysts who I’ve known since the days I was an analyst myself and spent a lot of time looking at server hardware designs and the like. In any case, some of this back and forth started to crystallize some of my thoughts around how the server hardware landscape could start changing. Some of this is still rather speculative. However, my basic thesis is that software people are probably going to start thinking more about the platforms they’re running on rather than taking for granted that they’re racks of dual-socket x86 boxes. Boom. Done.

What follows are some of the trends/changes I think are worth keeping an eye on.

CMOS scaling limits

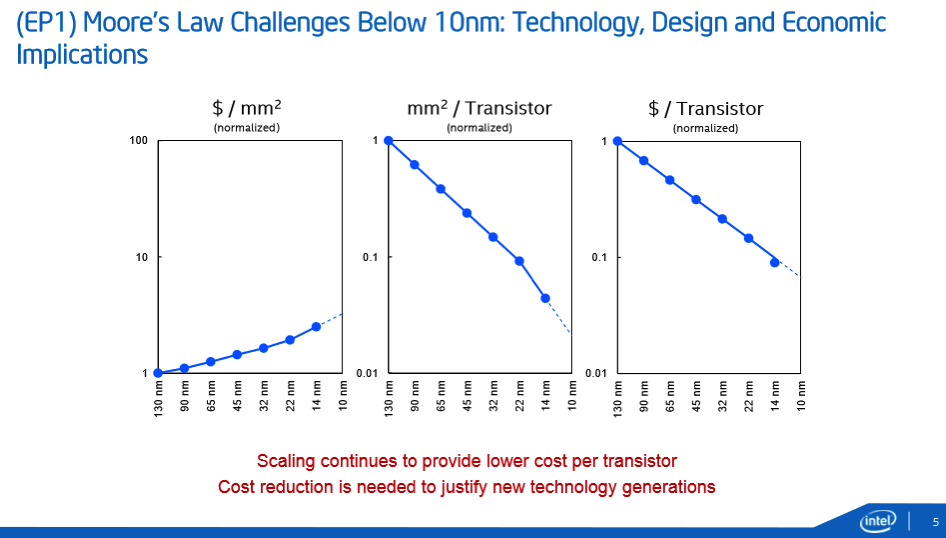

If this were a headline, it would probably be titled “The End of Moore’s Law,” but I’m not looking for the clicks. This is a complicated subject that I can’t give its proper due here. However, it’s at the root of some other things I want to cover.

Intel is shipping 14nm processors today (Broadwell and Skylake). It’s slipped the 10nm Cannonlake out to the second half of 2017. From there things get increasingly speculative: 7nm, 5nm, maybe 3nm.

There are various paths forward to pack in more transistors. It seems as if there’s a consensus developing around 3D stacking and other packaging improvements as a good near-ish term bet. Improved interconnects between chips are likely another area of interest. For a good read, I point you to Robert Colwell, presenting at Hot Chips in 2013 when he was Director of the Microsystems Technology Office at DARPA.

However, Colwell also points out that from 1980 to 2010, clocks improved 3500X and microarchitectural and other improvements contributed about another 50X performance boost. The process shrink marvel expressed by Moore’s Law (Observation) has overshadowed just about everything else. This is not to belittle in any way all the hard and smart engineering work that went into getting CMOS process technology to the point where it is today. But understand that CMOS has been a very special unicorn and an equivalent CMOS 2.0 isn’t likely to pop into existence anytime soon.
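To put those two figures on a per-year footing, here is a rough back-of-the-envelope sketch. It assumes smooth compound growth over the 30 years and that the two gains multiply, both of which are my simplifications rather than anything Colwell claims. The function name is made up for illustration.

```python
def annualized_factor(total_gain: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_gain over `years`."""
    return total_gain ** (1.0 / years)


YEARS = 2010 - 1980   # the 30-year window cited above
clock_gain = 3500.0   # clock improvement cited above
arch_gain = 50.0      # microarchitectural and other improvements cited above

# Assumption: the two contributions multiply. This is a simplification.
combined_gain = clock_gain * arch_gain

for label, gain in [
    ("clocks", clock_gain),
    ("microarchitecture and other", arch_gain),
    ("combined (assumed multiplicative)", combined_gain),
]:
    yearly = annualized_factor(gain, YEARS)
    print(f"{label}: {gain:,.0f}X total, about {100 * (yearly - 1):.0f}% per year")
```

Under those assumptions, the clock-driven share compounds at roughly twice the yearly rate of everything else put together, which is the overshadowing in a nutshell.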

Moore’s Law trumped all

There are doubtless macro effects stemming from processors not getting faster or memory not getting denser (at least as quickly as in the past), but I’m going to keep this focused on how this change could affect server designs.

When I was an analyst, we took lots of calls from vendors wanting to discuss their products. Some were looking for advice. Others just wanted us to write about them. In any case, we saw a fair number of specialty processors. Many were designed around some sort of massive-number-of-cores concept. At the time (roughly back half of the 2000s), there was a lot of interest in thread-level parallelism. Furthermore, fabs like TSMC were a good option for hardware startups wanting to design chips without having to manufacture them.

Almost universally, these startups didn’t make it. Part of it is just that, well, most startups don’t make it and the capital requirements for even fabless custom hardware are relatively high. However, there was also a pattern.

Even in the best case, these companies were fighting a relentless doubling of processor speed every 18 to 24 months from Intel (and sometimes AMD) on the back of enormous volume. So these companies didn’t just need to have a more optimized design than x86. They needed to be so much better that they could overcome x86’s inertia while competing, on much lower volume, against someone improving at a rapid, predictable pace. It was a tough equation.
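Here is a minimal sketch of that equation. It assumes the specialty design ships once and never improves, and that mainstream performance doubles on a fixed schedule. The lead sizes are hypothetical numbers picked for illustration, not figures from any real product.

```python
import math


def months_until_overtaken(advantage: float, doubling_months: float) -> float:
    """Months for a baseline that doubles every `doubling_months`
    to erase an `advantage`-fold performance lead (static challenger assumed)."""
    if advantage <= 1:
        return 0.0
    return doubling_months * math.log2(advantage)


# Hypothetical leads, checked against the 18 and 24 month doubling periods.
for advantage in (2, 5, 10):
    fast = months_until_overtaken(advantage, 18)
    slow = months_until_overtaken(advantage, 24)
    print(f"{advantage}x lead lasts {fast:.0f} to {slow:.0f} months")
```

Because the lead only buys time in proportion to its logarithm, even a large one-time advantage erodes within a handful of product generations, before counting the time needed to port software to the new chip.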

I saw lots of other specialty designs too. FPGAs, GPU computing, special interconnect designs. Some of this has found takers in high performance computing, which has always been more willing to embrace the unusual in search of speed. However, in the main, the fact that Moore’s Law was going to correct any performance shortcomings in a generation or two made sticking with mainstream x86 an attractive default.

The rise of specialists

In Colwell’s aforementioned presentation, he argues that the “end of Moore’s Law revives special purpose designs.” (He adds the caveat to heed the lessons of the past and not to design unprogrammable engines.) Intel’s recent $16.7 billion acquisition of Altera can be seen as part of a transition to a world in which we see more special purpose chips. As the linked WSJ article notes: "Microsoft and others, seeking faster performance for tasks like Web searches, have experimented with augmenting Intel’s processors with the kind of chips sold by Altera, known as FPGAs, or field programmable gate arrays. Intel’s first product priority after closing the Altera deal is to extend that concept."

Of course, CPUs have long been complemented by other types of processors for functions like networking and storage. However, the software-defined trend has been at least somewhat predicated on moving away from specialty hardware toward a standardized programmable substrate. (So, yes, there’s some irony in discussing these topics at an SDI Summit.)

I suspect that it’s just a tradeoff that we’ll have to live with. Some areas of acceleration will probably standardize and possibly even be folded into CPUs. Other types of specialty hardware will be used only when the performance benefits are compelling enough for a given application to be worth the additional effort. It’s also worth noting that the increased use of open source software means that end-user companies have far more options to modify applications and other code to use specialized hardware than when they were limited to proprietary vendors.

ARM AArch64

Flagging ARM as another example of potential specialization is something of a no-brainer even if the degree and timing of the impact are TBD. ARM is clearly playing a big part in mobile. But there are reasons to think it may have a bigger role on servers than in the past. That it now supports 64-bit is huge because that's table stakes for most server designs today. Almost as important, however, is that ARM vendors have been working to agree on certain standards.

As my colleague Jon Masters wrote when we released Red Hat Enterprise Linux Server for ARM Development Preview: "RHELSA DP targets industry standards that we have helped to drive for the past few years, including the ARM SBSA (Server Base System Architecture), and the ARM SBBR (Server Base Boot Requirements). These will collectively allow for a single 64-bit ARM Enterprise server Operating System image that supports the full range of compliant systems out of the box (as well as many future systems that have yet to be released through minor driver updates)." (Press release.)

There are counter-arguments. x86 has a lot of inertia even if some of the contributors to that inertia like proprietary packaged software are less universally important than they were. And there’s lots of wreckage associated with past reduced-power servers both using ARM (Calxeda) and x86-compatible (Transmeta) designs.

But I’m certainly willing to entertain the argument that AArch64 is at least interesting for some segments in a way that past alternatives weren’t.

Parting thoughts

In the keynote I gave at SDI Summit, The New Distributed Application Infrastructure, I argued that we’re in a period of rapid transition from a longtime model built around long-lived applications installed in operating systems to one in which applications are far more componentized, abstracted, and dynamic. The hardware? Necessary but essentially commoditized.

That’s a fine starting point to think about where software-defined infrastructure is going. But I increasingly suspect that it rests on a simplifying assumption that won’t hold for much longer. The operating system will help to abstract away changes and specializations in the hardware foundation as it has in the past. But that foundation will have to adjust to a reality that can’t depend on CMOS scaling to advance.
