- Get ready for your next-generation cloud: lessons learned from first-generation private clouds - BMC Blogs

- John McAfee In Crazytown | TechCrunch - "Hence McAfee, and his warm reception here. He’s an archetype of Def Con’s collective ethos, both good and bad, a cinematically awesome renegade / outlaw / trickster figure. And he’s also living proof, symbolically, that you can make your mark in the world, both technically and with a successful and famous startup, without selling out and turning corporate in any way; that you can accept everything life may offer and still remain fundamentally uncorrupted."

- Seth's Blog: Understanding substitutes

- The creator of Godwin’s Law on the inevitability of online Nazi analogies and the ‘right to be forgotten’ - The Washington Post - "Well, the thing that newspaper publishers know is that once something is out in the streets, it's very hard to go and get it back. Professional journalists have always known that you can't get the newspaper or the magazine back very easily. The traditional media have a more refined awareness of the lack of control over their content once it's out there. But for people who weren't in professional journalism, they haven't had to grapple with it. If they were writing stuff every day, it was probably in a diary that they kept in a drawer in their room."

- The other dude in the car | ROUGH TYPE - RT @derrickharris: Good take on the human aspect of the sharing economy and automation, via @roughtype: The other dude in the car

- Almost Perfect by W. E. Pete Peterson The Rise and Fall of WordPerfect Corporation

- AnandTech | Intel Broadwell Architecture Preview: A Glimpse into Core M - Sort of miss that I don't spend much time with hardware these days. Broadwell overview:

- Migrating to Cloud Native with Microservices - RT @adrianco: Video of my June QCon NY "Migrating to Cloud Native with Microservices" talk was just released

- Typing Errors - Reason.com

- TIME - Breaking News, Analysis, Politics, Blogs, News Photos, Video, Tech Reviews - RT @timbray: By me, at : “Why We Might Be Stuck With Passwords for a While”

Tuesday, August 12, 2014

Links for 08-12-2014

Thursday, August 07, 2014

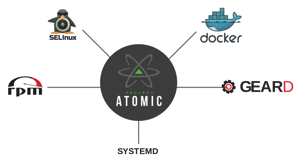

Why the OS matters (even more) in a containerized world

My former colleague (and frequent host for good beer at events) Stephen O’Grady of RedMonk has written a typically smart piece titled “What is the Atomic Unit of Computing?” which makes some important points.

However, on one particular point I’d like to share a somewhat different perspective in the context of my cloud work at Red Hat. He makes that point when he writes: "Perhaps more importantly, however, there are two larger industry shifts at work which ease the adoption of container technologies… More specific to containers specifically, however, is the steady erosion in the importance of the operating system."

It’s not the operating system that’s becoming less important even as it continues to evolve. It’s the individual operating system instance that’s been configured, tuned, integrated, and ultimately married to a single application that is becoming less so.

First of all, let me say that any differences here are probably in part a matter of semantics. For example, Stephen goes on to write about how PaaS abstracts the application from the operating system running underneath. No quibbles there. There is absolutely an ongoing abstraction of the operating system; we’re moving away from the handcrafted and hardcoded operating system instances that accompanied each application instance, just as we previously moved away from operating system instances lovingly crafted for each individual server. Stephen goes on to write, and I fully agree, that “If applications are heavily operating system dependent and you run a mix of operating systems, containers will be problematic.” Clearly one of the trends that makes containers interesting today in a way that they were not (beyond a niche) a decade ago is the wholesale shift from pet operating systems to cattle operating systems.

But (and here’s where I take some exception to the “erosion in the importance” phrase) the operating system is still there and it’s still providing the framework for all the containers sitting above it. In a containerized environment, the host OS arguably plays an even greater role than it does in hardware server virtualization, where the host is a hypervisor. (Of course, in the case of KVM for example, the hypervisor makes use of the OS for the OS-like functions that it needs, but there’s nothing inherent in the hypervisor architecture requiring that.)

In other words, the operating system matters more than ever. It’s just that you’re using a standard base image across all of your applications rather than taking that standard base image and tweaking it for each individual one. All the security hardening, performance tuning, reliability engineering, and certifications that apply to the virtualized world still apply in the containerized one.

To Stephen's broader point, we’re moving toward an architecture in which (the minimum set of) dependencies are packaged with the application rather than bundled as part of a complete operating system image. We’re also moving toward a future in which the OS explicitly deals with multi-host applications, serving as an orchestrator and scheduler for them. This includes modeling the app across multiple hosts and containers and providing the services and APIs to place the apps onto the appropriate resources.
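To make the placement idea a bit more concrete, here is a minimal sketch in Python of what "placing the apps onto the appropriate resources" can look like at its simplest. It is a toy first-fit scheduler, not how Kubernetes or any Red Hat product actually schedules; the `Host`, `Container`, and `place` names, and the CPU and memory figures, are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    # Hypothetical container host with a fixed amount of free capacity.
    name: str
    cpu_free: float
    mem_free_mb: int
    containers: list = field(default_factory=list)


@dataclass
class Container:
    # Hypothetical container with the resources it asks for.
    name: str
    cpu: float
    mem_mb: int


def place(containers, hosts):
    """Greedy first-fit: put each container on the first host with room.

    Returns a dict mapping container name to host name, or to None
    when no host has enough free CPU and memory.
    """
    placement = {}
    for c in containers:
        for h in hosts:
            if h.cpu_free >= c.cpu and h.mem_free_mb >= c.mem_mb:
                h.cpu_free -= c.cpu
                h.mem_free_mb -= c.mem_mb
                h.containers.append(c.name)
                placement[c.name] = h.name
                break
        else:
            # No host could take this container.
            placement[c.name] = None
    return placement


# An "app" modeled as several containers spread across two hosts.
hosts = [Host("host-a", 2.0, 2048), Host("host-b", 4.0, 8192)]
app = [
    Container("web", 1.0, 1024),
    Container("db", 2.0, 4096),
    Container("cache", 1.0, 1024),
]
result = place(app, hosts)
print(result)
```

A real orchestrator also has to weigh things like affinity, health, and rescheduling after failures; the sketch only shows the basic shape of the problem, which is matching declared requirements against what each host has left.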

Project Atomic is a community for the technology behind optimized container hosts; it is also designed to feed requirements back into the respective upstream communities. By leaving the downstream release of Atomic Hosts to the Fedora community, CentOS community and Red Hat, Project Atomic can focus on driving technology innovation. This strategy encompasses containerized application delivery for the open hybrid cloud, including portability across bare metal systems, virtual machines and private and public clouds. Related is Red Hat's recently announced collaboration with Kubernetes to orchestrate Docker containers at scale.

I note at this point that the general concept of portably packaging applications is nothing particularly new. Throughout the aughts, as an industry analyst, I spent a fair bit of time writing research notes about the various virtualization and partitioning technologies available at the time. One such set of technologies was “application virtualization.” The term covered a fair bit of ground but included products such as one from Trigence which dealt with the problem of conflicting libraries in Windows apps (“DLL hell” if you recall). As a category, application virtualization remained something of a niche but it’s been re-imagined of late.

On the client, application virtualization has effectively been reborn as the app store as I wrote about in 2012. And today, Docker in particular is effectively layering on top of operating system virtualization (aka containers) to create something which looks an awful lot like what application virtualization was intended to accomplish. As my colleague Matt Hicks writes:

"Docker is a Linux Container technology that introduced a well thought-out API for interacting with containers and a layered image format that defined how to introduce content into a container. It is an impressive combination and an open source ecosystem building around both the images and the Docker API. With Docker, developers now have an easy way to leverage a vast and growing amount of technology runtimes for their applications. A simple 'docker pull' and they can be running a Java stack, Ruby stack or Python stack very quickly."
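The "layered image format" idea can be sketched conceptually in a few lines of Python. This is only a mental model and an assumption on my part about how to illustrate it: it is not Docker's actual on-disk format or API, and the `flatten` function, the file paths, and the layer contents are all hypothetical. The point is simply that several application images can share one standard base layer and add only what they need on top.

```python
def flatten(layers):
    """Merge layers bottom-to-top into one filesystem view.

    Each layer is a dict of path -> content. Later layers override
    earlier ones; a value of None marks a path deleted by that layer.
    """
    fs = {}
    for layer in layers:
        for path, content in layer.items():
            if content is None:
                fs.pop(path, None)
            else:
                fs[path] = content
    return fs


# One standard base layer, shared by every application image.
base = {"/etc/os-release": "standard base OS", "/usr/lib/libc.so": "libc"}

# Runtime layers add only the minimum dependencies each app needs.
python_runtime = {"/usr/bin/python": "python interpreter"}
ruby_runtime = {"/usr/bin/ruby": "ruby interpreter"}

# Application layers sit on top.
app_a = {"/app/main.py": "service A"}
app_b = {"/app/main.rb": "service B"}

image_a = flatten([base, python_runtime, app_a])
image_b = flatten([base, ruby_runtime, app_b])

print(sorted(image_a))
print(sorted(image_b))
```

Both images carry the same base content underneath, which is the sense in which the hardening and tuning done once on the base still matters to everything running above it.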

There are other pieces as well. Today, OpenShift (Red Hat’s PaaS) applications run across multiple containers, distributed across different container hosts. As we began integrating OpenShift with Docker, the OpenShift Origin GearD project was created to tackle issues like Docker container wiring, orchestration and management via systemd. Kubernetes builds on this work as described earlier.

Add it all together and applications become much more adaptable, much more mobile, much more distributed, and much more lightweight. But they’re still running on something. And that something is an operating system.

[Update: 8-14-2014. Updated and clarified the description of Project Atomic and its relationship to Linux distributions.]

Tuesday, August 05, 2014

Podcast: OpenShift Origin v4 & Accelerators with Diane Mueller

Listen to MP3 (0:11:09)

Listen to OGG (0:11:09)

Links for 08-05-2014

- DevOps Isn't Killing Developers – But it is Killing Development and Developer Productivity | Javalobby - "But Devops is killing development, or the way that most of us think of how we are supposed to build and deliver software. Agile loaded the gun. Devops is pulling the trigger."

- How Developers and IT Think Differently about Security -- and Why It Matters » Druva

- Thomas Schelling interview

- The Wisdom of Crowds: Chapter Five, Part III - I find the focal points idea quite fascinating for some reason.

- 'Halt and Catch Fire' Season Finale Recap: Where There's Smoke… | Rolling Stone - Nice recap and analysis of the show.

- Review: Parrot’s Palm-Sized Copter Is Your $100 Cure for Drone Envy - Personal Tech News - WSJ - The new Parrot drone looks interesting. This is closer to my "fool around with" price range

Friday, August 01, 2014

Links for 08-01-2014

- Mark Burgess Website - The Gamesters of Transmogrification - "The APIzation of things, and the Software Defined Everything (SDx) movements worry me. These philosophies seem to say here's a bunch of complex tools that you might need, which are going to take you a while to learn, and which could get you into trouble if you use them wrong. The responsibility is yours to learn how to use this well, after all, with great power comes great responsibility. But this is the future, so get with the program! Best of luck."

- Untitled (http://www.xconomy.com/boston/2014/07/31/ma-wont-change-noncompete-law-vcs-pledge-to-continue-campaign/) - Skepticism this would happen is unfortunately confirmed. Mass not moving ahead with restricting non-competes

- Kodak Movie Film, at Death's Door, Gets a Reprieve - WSJ - "Kodak's motion-picture film sales have plummeted 96% since 2006, from 12.4 billion linear feet to an estimated 449 million this year."

- PC sales estimates: How the sausage gets made

- ongoing by Tim Bray · Privacy Economics - "But opportunistic privacy is better than none. A strong password is better than a weak one. A password manager is better than your memory. A second factor is better than just a password. An encrypted disk is better than a wide-open one. None of these things buy you anything absolute. But every time the dial turns, certain bad things stop happening, and the world becomes a better place."

- Reveal.js | OpenShift by Red Hat

- The United Names of America - Not what I would have thought--though I'm probably influenced by the ubiquity of "Main Street" in New England towns.

- ownCloud | OpenShift by Red Hat - RT @fabianofranz: #ownCloud 7 is out and already available on @OpenShift! Deploy your own cloud now:

- A Presentation Toolbox That Might Blow Your Audience Away: Florian Haas at OSCON 2014

Podcast: Software-defined Networking with Red Hat's Dave Neary

Listen to MP3 (0:15:34)

Listen to OGG (0:15:34)
