- Thinking About A Post-‘House Of Cards’ Netflix World - SplatF

- Cloud computing: Supply chain issues

- As Its 'Ideas Worth Spreading' Talks Push Into the Classroom, It's Time to Reject the Cult of TED – Tablet Magazine - A reasonable example of the TED backlash.

- Red Hat OpenShift Enterprise Use Case Animation - YouTube - Nice short animation introducing #openshift Enterprise

- Free mobile device policy template examples for CIOs - RT @AndiMann: RT @RachelatTT: A1 Speaking of #BYOD policies, we rounded up #mobile device policy templates: #CIOC ...

- Finding and Making Sense of Geospatial Data on the Internet | OpenShift by Red Hat - Cool post by @TheSteve0 on geospatial data on the Internet.

- Pushing back against licensing and the permission culture – Luis Villa

- Red Hat | OpenShift and OpenStack: A Match Made in the Cloud - .@asheshbadani discusses how OpenShift and OpenStack work together

- Red Hat | Red Hat and IBM Achieve Leading Performance Benchmark Results - TPC-C is a very I/O intensive benchmark (hard on virtualization). RHEL/KVM still delivers 88% of bare-metal performance.

- How Cloud Computing Is Redefining the M&A Landscape - Forbes

- Cloud Foundry, Forking and the Future of Permissively Licensed Open Source Platforms – tecosystems - RT @sogrady: new post | "Cloud Foundry, Forking and the Future of Permissively Licensed Open Source Platforms":

- 70% of 'Private Clouds' aren't Really Clouds at all - CIO.com - RT @AndiMann: 70% of Private Clouds Not Really #Cloud @BButlerNWW via @CIOonline #CIO < But do semantics matte ...

Thursday, February 28, 2013

Links for 02-28-2013

Why OpenShift has polyglot baked in

Another day, another language. Yesterday, another PaaS provider announced they were adding additional language support to their PaaS—in this case, supplementing their initial .NET PaaS with Java. Such moves have become something of a pattern. Many of the initial hosted PaaS offerings were unabashedly monolingual.

Engine Yard began with a Ruby on Rails focus, but has since added PHP and node.js. Google App Engine initially supported a variant of Python but now does Java and Go too. (Go is a Google-developed language that aims to provide the efficiency of a statically typed compiled language with the ease of programming of a dynamic language.) AppFog recently discontinued their PHP-only PHPFog platform. Even Microsoft's .NET-centric Azure PaaS has added Java.

I can't say I'm surprised. Whenever Red Hat has conducted surveys about intended language use in the cloud--whether private, public, or hybrid--we've always seen a great deal of diversity in the answers. (As well as considerable correlation with the languages those taking the survey are currently using.) Given those facts, it seems unlikely that most enterprise development shops would be interested in adopting a service that limited them to a narrow set of languages or frameworks.

This isn't to say that enterprise software development is completely ungoverned. (Though sometimes it seems that way, given the breadth of tools in use.) In fact, as I discussed previously, one of the big attractions of PaaS for enterprise architects is that it provides opportunities for standardizing development workflows, thereby making both the initial development and the subsequent lifecycle management of applications much more efficient. But, standardization notwithstanding, enterprise applications and infrastructure are heterogeneous. And that means a polyglot development environment is a must.

That is the approach Red Hat has taken with OpenShift from the beginning, whether we're talking about the OpenShift Online hosted service or the OpenShift Enterprise on-premise version. (The other thing we hear consistently is that many large organizations adopting PaaS want to run it on their own servers; application development is just too central a task for them to be comfortable running it on a hosted service.)

OpenShift is fundamentally architected around choice. "Technologies" (languages, databases, etc.) are delivered as cartridges, pluggable capabilities you can add at any time. When you create an application, you start with a web platform cartridge, to which you can add additional capabilities as you choose. Each cartridge runs on one or more "gears" (basically, a unit of OpenShift capacity), depending on how far your application has been scaled.

Major open source Web language choices are ready to grab and go: Java EE 6, PHP, Ruby, Perl, Python, and node.js. But you can also build your own cartridges. You can even connect cartridges together. For example, you could have a PHP cartridge in one gear and a MySQL cartridge in another gear. (We're in the process of rolling out a new cartridge design to make building cartridges easier.)
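To make the cartridge and gear relationship a bit more concrete, here is a minimal Python sketch. It is a toy model, not OpenShift's actual API or internal data structures: the `Cartridge`, `Gear`, and `Application` classes and the `add_cartridge` and `scale_web` methods are hypothetical names chosen for illustration, and the placement rules (an add-on cartridge such as MySQL on its own gear, extra gears running copies of the web cartridge when scaling) are simplifying assumptions based on the PHP/MySQL example above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Cartridge:
    name: str  # illustrative name, e.g. "php" or "mysql"
    kind: str  # "web" for the platform cartridge, "addon" for everything else


@dataclass
class Gear:
    gear_id: int  # a gear is a unit of capacity that hosts cartridges
    cartridges: List[Cartridge] = field(default_factory=list)


@dataclass
class Application:
    name: str
    web: Cartridge  # every app starts from a web platform cartridge
    addons: List[Cartridge] = field(default_factory=list)
    gears: List[Gear] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Assumption: a new application begins with its web cartridge on one gear.
        self.gears.append(Gear(0, [self.web]))

    def add_cartridge(self, cart: Cartridge, separate_gear: bool = True) -> None:
        # Add a capability at any time, either on its own gear or co-located
        # with the web cartridge on the first gear.
        self.addons.append(cart)
        if separate_gear:
            self.gears.append(Gear(len(self.gears), [cart]))
        else:
            self.gears[0].cartridges.append(cart)

    def scale_web(self, copies: int) -> None:
        # Scaling out adds gears that each run another copy of the web cartridge.
        for _ in range(copies):
            self.gears.append(Gear(len(self.gears), [self.web]))


app = Application("blog", Cartridge("php", "web"))
app.add_cartridge(Cartridge("mysql", "addon"))  # PHP on one gear, MySQL on another
app.scale_web(2)
for gear in app.gears:
    print(gear.gear_id, [c.name for c in gear.cartridges])
```

Running it lists four gears: the original PHP gear, the MySQL gear, and two more PHP gears added by scaling. The point of the model is only that capabilities are composable pieces placed onto units of capacity, which is what makes adding a language or service a matter of plugging in another cartridge.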

This ability to extend OpenShift is an important architectural feature that dovetails right into the open source development model and leverages the power of the community. And it's not an afterthought. It's in OpenShift's DNA.

Wednesday, February 27, 2013

PaaS isn't just for developers

Most of the attention paid to Platform-as-a-Service (PaaS) focuses on its impact on developers. That's understandable. After all, developers are the ones "consuming" PaaS in order to create applications. In fact, as I've written about previously, Eric Knipp of Gartner goes so far as to call today "a golden age of enterprise application development," in no small part because of PaaS. Developer productivity is incredibly important, given that businesses large and small depend on information technology more than ever. And, while much of that IT can and should come from pre-packaged software and services, plenty needs to be customized and adapted for a given business, industry, and customer set.

As my Red Hat colleague Joe Fernandes discussed in a recent podcast:

For developers, Platform‑as‑a‑Service is all about bringing greater agility and giving them a greater degree of self‑service, really removing IT as the bottleneck to getting things done. In public PaaS services like OpenShift, developers can come and instantly begin deploying applications. They can choose from a variety of languages and frameworks and other services like databases and so forth. And they don't need to wait for systems to be provisioned and software to be configured. The platform is all there waiting for them, so they can be productive much more quickly. And really, what that means is that they can focus on what matters most to them, which is really their application code. They can iterate on their designs and really see the applications up and running without having to worry about how to manage what's running underneath.

But, as Joe also discussed, PaaS isn't just for developers. As we start to inject Platform-as-a-Service into enterprise development environments, often in the form of an on-premise product such as OpenShift Enterprise, it helps system administrators and system architects too.

Consider first the IT operations teams, the "admins" in the vernacular. They're tasked with supporting developers. They're the ones who have historically had to deal with the help desk tickets filed to request new infrastructure for a project. They're also the ones who get bombarded with increasingly irate questions about why the new server hasn't been installed and provisioned yet. Of course, virtualization and virtualization management have helped to some degree, but they've generally reduced the internal friction of the process rather than fundamentally changed it.

A PaaS, on the other hand, allows admins to focus up-front on basic policies (such as whether to use a public hosted service or to deploy in-house) and to work with developers on defining which standardized environments they need. At that point, self-service and automation (under policy) can largely take over. The "machinery" can scale the apps, deliver new development instances, isolate workloads, and spin down unused resources, all without much ongoing involvement by the admins.

Of course, if it's an on-premise environment, they'll still need to manage the underlying infrastructure, but that's the price of having more direct control and visibility than with a public shared service. An IT operations team has to manage this infrastructure efficiently and securely. PaaS can help with that too. For example, OpenShift goes beyond server virtualization by introducing the concept of multi‑tenancy within a virtual machine using a combination of performance and security features built into Red Hat Enterprise Linux.

As for enterprise architects, they're trying to marry the IT infrastructure, IT operations, and application development methodologies to the needs of the business. So they have to understand where the business is going and how IT is going to architect its infrastructure, its applications, and its processes to address those needs. This comes in the face of tremendous growth in demand from the business for new services, new back-end applications, new mobile applications, new web services, and more. It falls on enterprise architects to help figure all this out.

One way PaaS helps is that it lets them standardize the developer workflows, that is, the process that IT needs to go through every time a developer starts on a new project: getting developers provisioned with the infrastructure and software they need so that they can start developing, testing, performance testing, or even deploying those applications all the way through to production. The result is not only faster application development but less fragile and error-prone application architectures, attributes that are especially important as we move toward more modular and loosely coupled software.

As Joe put it to me:

You're never going to eliminate the role of the IT operations team in an enterprise context. What you need to do is figure out how the operations team can work more effectively with the development side of the house to meet the needs of the business. To me, it's not dev or ops. It's really both. The developers aren't going to take over the job that the IT operations team does any less than the IT operations team is going to be able to build and deploy their own applications and so forth.

The question is, how do both sides work more effectively together? How do they reduce friction and really help accelerate time to market? Because, ultimately, that's all the business cares about. Business cares about when they can get their new service and how quickly they can start leveraging that, whether it's an internal or external application that they're looking for, and it's incumbent on IT organizations, operations team, as well as developers, to help figure that out. That's really what we're trying to do with Platform‑as‑a‑Service: drive that process forward.

Tuesday, February 26, 2013

Links for 02-26-2013

- Sadly, this is not The Onion

- Fear of lock-in dampens cloud adoption — Tech News and Analysis - "It’s a fact of life: Cloud vendors have a vested interest in making it drop-dead simple and cheap to put your data on their respective clouds. If you don’t believe that just witness the price war that Amazon, Google and Microsoft are waging on cloud storage. Those vendors obviously hope once your data is in their grasp, they can up-sell you on pricier higher-level services. And, they don’t necessarily see the value in making the return trip so easy and that’s what has people spooked."

- Twitter / davidegts: "6 Reasons to Pay for #OpenSource ... - RT @FOSSwiki: RT @davidegts: "6 Reasons to Pay for #OpenSource Software" featuring @ghaff

- Why Americans Are the Weirdest People in the World

- Why Your Enterprise Private Cloud is Failing | Forrester Blogs - "Bottom line: Your private cloud is very different than your static virtualization environment."

- Making a hybrid cloud model a reality in enterprise IT - "The ability to automatically migrate between private and public clouds is the promise, but that technology is emerging and comes with some tradeoffs. Perhaps this is why we don't see much hybrid cloud computing -- but we do see the promise."

- Reflections of a Newsosaur: Why traditional publishers won’t buy Globe

- Can Barnes and Noble Be Saved? - The Daily Beast - "If I were Mr. Riggio, I'd go back and try to reconceptualize the bookstore from scratch, starting small and expanding as needed, rather than trying to salvage the brilliant idea of three decades ago. On the other hand, I don't have a successful company to save."

- Hotmail Shame: The Digital World’s Social Pressures And Netiquette Dilemmas | WBUR - "When you receive an email from one of those services, “You’re like, ‘Oh, this is adorable! This is someone’s aunt — that’s nice!’ ” says Steve Macone, a stand-up comedian and freelance writer. “In a few years it will probably be a vintage thing. I’m sure everyone in Williamsburg, Brooklyn, has @AOL email addresses ironically that they try to use.”"

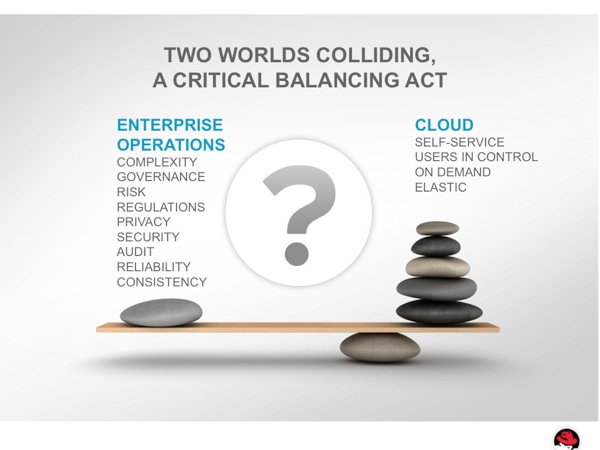

Balancing the desires of users with the needs of enterprise IT

Forrester's James Staten has a typically smart blog post up called "Why your enterprise private cloud is failing." It's based on "The Rise Of The New Cloud Admin," a report that James co-wrote with Lauren Nelson. James writes:

You're asking the wrong people to build the solution. You aren't giving them clear enough direction on what they should build. You aren't helping them understand how this new service should operate or how it will affect their career and value to the organization. And more often than not you are building the private cloud without engaging the buyers who will consume this cloud.

And your approach is perfectly logical. For many of us in IT, we see a private cloud as an extension of our investments in virtualization. It's simply virtualization with some standardization, automation, a portal and an image library isn't it? Yep. And a Porsche is just a Volkswagen with better engine, tires, suspension and seats. That's the fallacy in this thinking.

To get private cloud right you have to step away from the guts of the solution and start with the value proposition. From the point of view of the consumers of this service - your internal developers and business users.

The post and report do a great job of articulating why a private cloud isn't just an extension of virtualization. It may leverage and build on virtualization, but the thinking and approach differ more than may be readily apparent if you're just focused on the technology.

I think about it thusly. Even though it's about virtual rather than physical, the fundamental virtualization mindset is still about servers. With cloud, by contrast, that mindset should shift to delivering IT services to users. That's a big difference. (This shift is discussed in more detail both in Visible Ops Private Cloud: From Virtualization to Private Cloud in 4 Practical Steps by Andi Mann, Kurt Milne, and Jeanne Morain and in my new book Computing Next: How the cloud opens the future.)

To make things a bit more concrete, here's another way of looking at the expectations for a private cloud.

Public clouds were initially largely a grassroots phenomenon. Users voted with their credit cards for IT resources delivered in minutes, not months. They voted for freedom from restrictions in the type of software they could run. They voted for easy-to-use interfaces and fewer roadblocks to developing new applications.

When an enterprise builds a private or a hybrid cloud, it needs to preserve the goodness that drove its users, often developers, to the cloud in the first place. It may well need to balance these desires with legitimate governance, consistency, compliance, and security requirements. But it has to do so without effectively throwing out the cloud operating model and going back to business as usual.

As RedMonk's Stephen O'Grady told me in a recent podcast:

If you're looking to rein in or at least gain some visibility into usage, you basically have two choices. You can try to say, "No, you can't do this and you can't use these tools." As I've said, that's an effort, in my opinion, doomed to failure in most cases. The alternative is to say, "I understand that there are reasons and very legitimate business reasons that you're doing what you're doing. I'm going to try to go along with that program as much as I can. In return for that, I want visibility into what's going on." In other words, trying to meet developers halfway and having them do the same.

This is where open, hybrid cloud management comes in. I'm going to discuss the components of this management, as implemented in Red Hat CloudForms and ManageIQ, in greater detail in an upcoming post. But, for our purposes here, open hybrid cloud management is fundamentally about balancing what users/developers want and what enterprises need. It's about offering the user experience of the public cloud within the policy framework of enterprise IT.

Sunday, February 24, 2013

Gordon Haff author interview: Computing Next

Decisions I made when publishing my new book

I spent most of yesterday making a final (hopefully) set of tweaks to my new cloud computing book, or at least final until such time as I decide substantial revisions (i.e., a new edition) are called for. As I'm sure everyone has experienced in their own way, getting to 100 percent (or as close to 100 percent as reality allows) always takes far more time than it seems as if it should. Especially when you are getting profoundly sick of the whole enterprise and just want it over with. I'm going to give everything a few days to settle, but here's hoping that, once everything percolates through Amazon's system (they seem to use something of an "eventually consistent" model for their publishing platform as for other things), I'll be able to call the production end of things a wrap and feel confident promoting the book to a broader audience.

Given that, I thought I'd take the opportunity to do something of a postmortem, both in the interest of sharing possibly useful information and to document a few things for myself. This is, by no means, intended to be a definitive guide to publishing a book. Rather, it highlights things I learned in the course of this experiment.

As many of my readers know, by day I am Red Hat's cloud evangelist. Thus, this book was only a pseudo-personal project. I wrote much of it on my own time, but it leveraged a fair amount of material I had previously written for one outlet or another (blog posts and the like), as well as material others wrote and which they gave me permission to use. My goal was to pull together my thinking on cloud computing and related trends within this context. It wasn't and isn't really focused on profit.

Up-front decisions:

Publisher. I decided to publish through the Amazon CreateSpace publishing platform. To some degree, this decision came about from following the path of least resistance. The timeline for this project expanded and contracted based on a variety of external factors as I rethought various topics. Some of my thinking about the best way to approach certain aspects of the book also morphed. Certainly a publisher could potentially have helped me through some of these questions. At the same time, they'd also likely impose constraints, based on marketability which, as I've indicated, wasn't a top priority for me. At the end of the day, I felt comfortable tackling the project on my own and it just seemed easiest that way. (At one point, we did consider making this an "official" Red Hat project, but that didn't come to fruition for a variety of reasons.)

Length. My initial thinking was that my book should be a "normal" length, which my research suggested was somewhere around 60,000 words. I've come to think that, while there are reasons to have the heft of a typical book, it's not really necessary—at least in the context in which I was working. I recently wrote a post about how short books are more practical today than in the past. That said, although I trimmed some material that I came to see as filler, I added other material. And I rather liked the "guest posts" others let me use even if they were partly there initially to pad things out.

Organization. One of my colleagues, Margaret Rimmler, suggested the idea of short chapters based on the look of Jeremy Gutsche's Exploiting Chaos. That basic concept fit well with leveraging existing, relatively short (1,000 words or so) blog posts and the like. The chapters in Computing Next are longer and the tone is considerably different, but I did ultimately stick with the idea of having chapters that are relatively standalone.

Format. Where I broke considerably from Exploiting Chaos' look and feel was in my approach to graphics. I initially was headed down the road of having a graphically rich book with lots of full-bleed photographs and the like. However, I came to rethink this approach. For one thing, I realized it was going to create quite a bit of incremental work and cost; I'd have to use a full desktop publishing program like InDesign or Scribus (with which I had just about zero familiarity in both cases) and I'd need to print the book in full color. For another, much of the work would be irrelevant to the Kindle version. In the end, I decided to primarily just include graphics that were directly relevant to the book's content.

Footnotes. I struggled with this one a bit. I really like using footnotes in my writing. Not so much for the purposes of exhaustively citing sources, but as a way to provide additional background or context without breaking up the flow of the writing. Unfortunately, footnotes on a Kindle aren't ideal as they're essentially hyperlinked endnotes that tend to take you out of the flow more than a digression would. That said, I decided to just use footnotes anyway because it's what I'm used to.

Tools. With the book now primarily text, there was no particular benefit to working in a program that let me see that text as it would appear on the printed page. (I'd need to format it eventually, but the writing now didn't need to reflect layout considerations to any significant degree.) I ended up using Scrivener on my Mac. One of the really nice things about Scrivener is that it's very easy to work on, label, group, and rearrange individual chapters—a great match for the style of my book. Once I got to a mostly complete first draft, I exported the text into iWork Pages and then worked on it in that format for the balance of the project. In general, I find Pages is less annoying than Microsoft Word in a variety of ways although, as we'll see, I did ultimately export from Pages to Word in order to create the Kindle version.

Editing:

Several colleagues read through the manuscript with greater or lesser degrees of rigor. I did a front-to-back fine-tooth comb read after the manuscript was complete and integrated. Furthermore, a decent amount of the content had been previously edited in some form or other. In spite of all this, I decided to engage a copy editor (a former intern of a magazine editor acquaintance of mine).

There were lots of corrections. To be sure, some of them were stylistic nitpicking, but there were also corrections of no small number of grammatical errors and misspellings. I can't say I was really surprised, having written and been edited for many years. Past a point, you just start reading what you expect to read and not what's actually on the page. The lesson? You absolutely must have a copy editor do a thorough review. And, in general, even friends and acquaintances who are good writers mostly won't read an entire book with the care needed to really clean it up.

(I initially considered hiring someone who would be better equipped to edit for content, tone, flow, etc., but the couple of possibilities I had in mind didn't pan out and I didn't really want to spend a lot more money.)

Cover:

The cover arguably makes less difference to Amazon purchases than it does in a bookstore. Nonetheless, you want something that looks professional. I downloaded a template from Amazon and worked on it in Adobe Photoshop Elements. (I'm certainly not a design professional, but I do have some design background and training.)

Kindle:

Creating a Kindle version wasn't as straightforward as I had hoped/expected. If you expect to just take your CreateSpace PDF and upload it to Kindle Direct Publishing and have life be good, you're probably going to be disappointed. I'll probably do a separate post on this, but I'll note here a few specific issues I had.

- Small inset photos won't display that way in the Kindle version. (These were head shots of the guest authors in the case of my book.) I ended up just taking out all of these photos, as well as photos in the section breaks that were just there for graphical interest.

- You may have to manually create page breaks.

- Depending upon the word processing program, you may have to manually change certain styles to be explicitly bold or italic as opposed to using a bold or italic font. (In other words, if a heading uses the Gill Sans MT Bold font rather than Gill Sans MT with a bold setting in the word processor, it won't display as bold on the Kindle.) It doesn't help that Word seems to make some of these substitutions on its own.

- You may have to create a Table of Contents manually depending, seemingly, on the phase of the moon. You basically do so by inserting a "toc" (without the quotes) bookmark where you want the Table of Contents to be, inserting the text you want in the Table of Contents (without page numbers), and then creating a hyperlink from each line to a bookmark at the corresponding chapter. Yes, it's a pain in the neck. It's probably best tested by downloading the mobi file created when you upload your draft Kindle book to Amazon and opening it on a Kindle or in a Kindle app.
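For anyone who finds it easier to see the structure spelled out, the manual Table of Contents described above can be sketched in HTML terms, which is roughly what a word processor emits when it exports bookmarks and hyperlinks. This is a minimal Python sketch under stated assumptions: that a word-processor bookmark corresponds to an HTML `<a name="...">` anchor, and that chapter bookmarks are named `chapter1`, `chapter2`, and so on (hypothetical names; any unique names would do). The `build_toc` and `chapter_heading` helpers are illustrative only, not part of any Amazon or Kindle Direct Publishing tool.

```python
from html import escape
from typing import List


def build_toc(chapters: List[str]) -> str:
    """Build a TOC block: a "toc" bookmark, then one linked line per chapter."""
    # Assumption: the "toc" bookmark maps to an HTML anchor named "toc".
    lines = ['<a name="toc"></a>', "<h1>Contents</h1>"]
    for i, title in enumerate(chapters, start=1):
        # Each TOC line links to a (hypothetically named) chapter bookmark;
        # no page numbers, since reflowable e-books don't have fixed pages.
        lines.append(f'<p><a href="#chapter{i}">{escape(title)}</a></p>')
    return "\n".join(lines)


def chapter_heading(i: int, title: str) -> str:
    """The bookmark each TOC hyperlink points at, placed at the chapter start."""
    return f'<a name="chapter{i}"></a><h1>{escape(title)}</h1>'


chapters = ["Why cloud?", "Clouds & PaaS"]
print(build_toc(chapters))
for n, t in enumerate(chapters, start=1):
    print(chapter_heading(n, t))
```

The design point is simply that every TOC entry is a hyperlink to a matching bookmark; whichever tool you use, a broken or misnamed bookmark is the usual reason a TOC entry goes nowhere, which is why testing the downloaded mobi file matters.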

The good news is that, for many books, it doesn't seem as if you need to get all down and dirty with HTML or ePub code; you can just stick to your word processor. But, in my experience, you are going to have to adapt the document created for the print edition to display nicely on a Kindle. (And it makes sense to think about the Kindle version as you're designing the book.)

Thursday, February 21, 2013

Links for 02-21-2013

- America's New Mandarins - The Daily Beast - "Passing the tests and becoming a "scholar official" was a ticket to a very good, very secure life. And there is something to like about a system like this . . . especially if you happen to be good at exams. Of course, once you gave the imperial bureaucracy a lot of power, and made entrance into said bureaucracy conditional on passing a tough exam, what you have is . . . a country run by people who think that being good at exams is the most important thing on earth. Sound familiar?"

- U.S. Ups Ante for Spying on Firms: China, Others Are Threatened With Penalties - WSJ.com - "The White House threatened China and other countries with trade and diplomatic action over corporate espionage as it cataloged more than a dozen cases of cyberattacks and commercial thefts at some of the U.S.'s biggest companies."

- The Obama Campaign's Technology Is a Force Multiplier - NYTimes.com

- Inside Team Romney’s whale of an IT meltdown | Ars Technica - A lot of detail about where the Romney data effort broke down.

Wednesday, February 20, 2013

Datamation Google+ Hangout on private/hybrid cloud

Fun discussion with myself, James Maguire, Andi Mann, Kurt Milne, Mark Thiele, and Sam Charrington. We talk about roadblocks to building a cloud, whether they lower costs, how to get started, and whether openness is important. (You can probably guess my take on that last point.)

Links for 02-20-2013

- Red Hat has BIG Big Data plans, but won't roll its own Hadoop • The Register - Typically uber-detailed look at announcement by Timothy Prickett Morgan.

- How to Set Up Soundflower for Audio Recording - LockerGnome

- Free Speech in the Era of Its Technological Amplification | MIT Technology Review

- “Djesus Uncrossed,” Grievance, and How Satire Actually Works | TIME.com - RT @poniewozik: New post: "Djesus Uncrossed," Grievance, and How Satire Actually Works

- Problems With Precision and Judgment, but Not Integrity, in Tesla Test - NYTimes.com - Seems a fair wrap-up by the NYT public editor

- (500) http://bit.l - RT @JWhitehurst: Glad you were about to cover the value of the open source subscription with @CIOMagazine Gordon, @ghaff - … ...

Monday, February 18, 2013

Links for 02-18-2013

- Winter chills limit range of the Tesla Model S electric car - Consumer Reports article shows same broad outlines of mileage drops as NYT piece

- Let's Blow Some Stuff Up | About | Wired.com - RT @chr1sa: Love this: @sdadich on reinventing @wired. Let's Blow Some Stuff Up

- Dash-cams: Russia's Last Hope For Civility And Survival On The Road - ANIMAL

- How To: Writing an Excellent Post-Event Wrap Up Report | Hawthorn Landings - RT @lhawthorn: New Blog Post: How To Write an Excellent Post Event Wrap Up Report #opensource #marketing #FOSS

- Intel Changes Itanium Plans, Jeopardizing HP's Unix Servers - Data Center - CRN India - Sounds like Kittson is going to be a pretty minor tweak to Poulson. via @CloudComputing3

- Maker's Schedule, Manager's Schedule - RT @asheshbadani: Inspired by , Wednesdays are Makers Days for the OpenShift team starting March!

- 6 Reasons to Pay for Open Source Software - CIO - RT @annaucbo: 6 Reasons to Pay for Open Source Software (with @ghaff !) via @CIOonline

- Time Inc., the Unwanted Party Guest Being Pushed Out the Door - NYTimes.com - I've subscribed to Time for most of the past 35 years or so.

- Does Anybody Really Want an iWatch? -- Daily Intelligencer

Friday, February 15, 2013

Podcast: RedMonk's Donnie Berkholz talks Big Data

Donnie Berkholz describes himself as RedMonk's resident Ph.D.

He spent most of his career prior to RedMonk as a researcher in the biological sciences, where he did a huge amount of data analysis & visualization as well as scientific programming. He also developed and led the Gentoo Linux distribution.

In this podcast, he discusses the impact of Big Data, why you need models, how to get started in Big Data, and what we'll be saying about the whole space five years from now.

Listen to MP3 (0:09:37)

Listen to OGG (0:09:37)

Transcript:

Thursday, February 14, 2013

Podcast: RedMonk's Stephen O'Grady on developers, The New Kingmakers

I caught up with Stephen at Monkigras in London a couple of weeks ago. In this podcast, Stephen discusses the central thesis of his book and we spar a bit over the question of to what degree companies like Apple are really catering to the needs of developers (as opposed to the developers just going where the money is).

MP3 version (0:22:04)

OGG version (0:22:04)

Transcript: